15/12/2023

“What gets measured gets managed.” (Peter Drucker)

Food waste, meaning food that is thrown away or left uneaten, is receiving increasing attention and research worldwide. Most of that attention goes to food that is ready to be consumed: food in transport, in shops, in restaurants or at home. Food that is lost before it gets that far, for instance on farms during harvesting or storage, is usually overlooked. First tentative estimates suggest that this loss is about 15% of total food production, but the figure is uncertain because on-farm loss is not routinely measured, and we do not yet know how to measure it efficiently. In this part of the FOLOU project, we are exploring whether new technologies such as drones and artificial intelligence can help us measure food loss on farms.

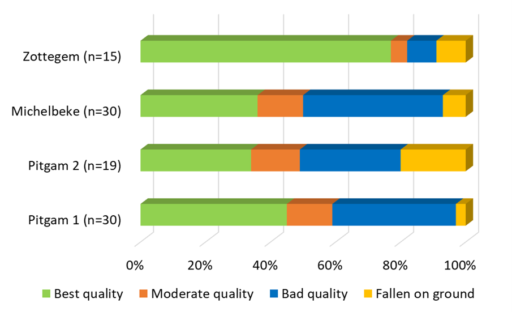

Here, we present the very first results of the summer campaign, which focused on food loss in apple orchards. In five Jonagold orchards in Belgium and Northern France, researchers from Ghent University picked all the apples from a number of trees, sorted them into piles according to quality and counted the apples in each pile (Figure 2). The counts show that food losses are indeed high: in the best orchard, about 1 in 5 apples was not fit for human consumption, and at other sites this was as high as 1 in 2 (Figure 3).

Figure 1: Jonagold orchards

Figure 2: Sorting the apples in different classes

Figure 3: Apple statistics for different orchards (n is the number of trees)

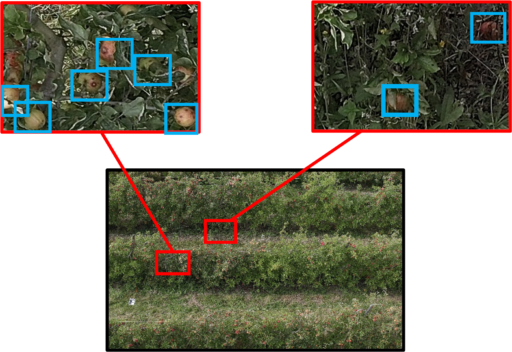

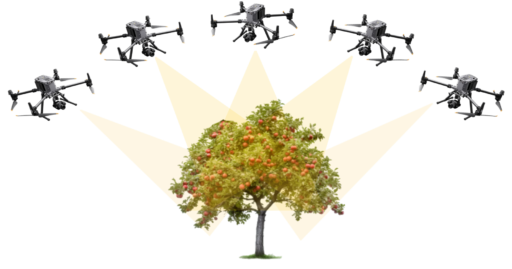
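The loss shares quoted above come down to simple counting: the number of apples in the piles that are not fit for consumption, divided by the total number of apples picked. A minimal sketch of that arithmetic follows. The class names (`good`, `damaged`, `rotten`), the function name `loss_share` and the counts are all hypothetical placeholders chosen for illustration, not the project's actual sorting classes or measured data.

```python
def loss_share(counts, edible_classes=("good",)):
    """Return the fraction of counted apples that fall outside the edible classes.

    counts: mapping from quality class name to number of apples in that pile.
    edible_classes: class names treated as fit for human consumption
                    (an assumption; the real sorting scheme may differ).
    """
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no apples counted")
    lost = sum(n for cls, n in counts.items() if cls not in edible_classes)
    return lost / total


# Made-up counts for one tree, for illustration only (not measured data).
example_counts = {"good": 80, "damaged": 12, "rotten": 8}
print(f"{loss_share(example_counts):.0%} of apples not fit for consumption")
```

With these placeholder counts, 20 of the 100 apples fall outside the edible class, which corresponds to a loss of 1 in 5.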

The actual goal of this research is to find out if a drone can be used to count and correctly categorize these apples. We therefore flew a drone over the orchard to collect highly detailed images of the canopy of the apple trees. With these images, we will now train an AI model that can automatically recognize apples and distinguish between good- and poor-quality apples.

Figure 4: Two drones were used: a DJI M350 with a high-resolution camera (left) and a DJI M600 with LiDAR (right).

Figure 5: High-resolution RGB images, with a zoom on healthy and damaged apples.

Unfortunately, this is not going to be sufficient on its own. Because the canopy is dense, not all apples on a tree are visible. We therefore collected drone images from five different viewing angles, and now have to decide which viewing angle, or combination of angles, works best (Figure 6). In addition, we repeated the flights to collect LiDAR data. This specialized sensor provides a detailed 3D model of the entire canopy, which will be used to calculate a correction factor for apples that are still not visible in the images.

Figure 6: We collected photos of the apple trees from 5 different viewing angles, to find out how we can best count the apples on the trees.
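How the correction factor will be calculated is still to be worked out. As one possible reading, the sketch below assumes that the 3D canopy model gives an estimate of the fraction of apples visible from a given viewing angle, and scales the detected count up accordingly. The function name `corrected_count`, the visible-fraction input and the numbers are hypothetical, and this is not the project's method.

```python
def corrected_count(detected, visible_fraction):
    """Scale a detected apple count up to an estimated total.

    detected: number of apples found in the images of one tree.
    visible_fraction: assumed share of apples visible from the chosen
                      viewing angle, in (0, 1]. Here it is simply given;
                      deriving it from LiDAR data is outside this sketch.
    """
    if not 0 < visible_fraction <= 1:
        raise ValueError("visible_fraction must be in (0, 1]")
    return detected / visible_fraction


# Made-up numbers for illustration: 60 apples detected, 75% assumed visible.
print(corrected_count(60, 0.75))  # 80.0
```

The correction factor in this reading is `1 / visible_fraction`, so a tree where three quarters of the apples are visible would have its detected count multiplied by about 1.33.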

What is next?

In the coming months, we will develop the AI model. Then we will see which viewing angle works best and how to adjust for apples that remain invisible even then.